I have been working on medical AI, in some form or other, for most of my adult life. For the past 12 months I have taken the opportunity to pause from racing forwards with my own start-ups and to look again, partly as a researcher, at the tools at my disposal and their intended applications. What I have seen worries me.

Part of my effort to improve things has taken the form of a number of peer-reviewed scientific articles. A few more such articles are still under review or exist only as work-in-progress. Today I want to summarise the five greatest problems which I see facing medical AI systems. For some of them I think that there are clear mitigations. For others, I suspect that we will need to rethink the entire system.

Non-stationarity of the data

Clinical standards change over time. This is a fact.

In the 1990s there was a scare about rising numbers of people being diagnosed with autism. It turned out that the clinical diagnostic standards (DSM) had been changed in roughly 1992. This broadened the diagnostic criteria and led to most of the increase in diagnoses.

I have seen the same issue in a recent data-driven project focused on pregnancy. The standard of care for pre-eclampsia, meaning how doctors are supposed to act, including the definition of at-risk, has changed multiple times in recent years. I can see these changes in the data. Different women are being considered at-risk; they are being called in for enhanced monitoring at different periods in their pregnancies; and, as a result of this altered surveillance, they are receiving different treatments from what they would have received had the standards not changed. This means that the population being monitored has changed, and that some of the values which we observe have changed their distributions. This is a huge problem for a data-driven model.

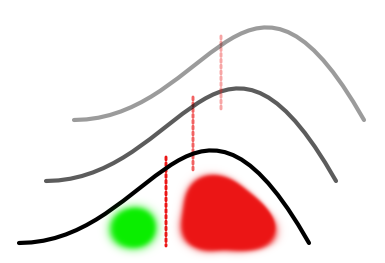

The machine learning literature typically refers to this problem under the heading concept drift or semantic drift. This is a polite way of saying that words and concepts change their meaning over time. The mitigation for this phenomenon is either detection, followed by retraining, or segmenting data based on inferred meaning and then modelling them separately.

These are the correct engineering answers to this problem. But how do you detect drift in practice? There is a necessary lag to detection. How will patients be treated before the lag expires? Retraining, either on a new data set or on segmented data streams, requires sufficient training examples. What happens until this training data, following the new practices, has been gathered? How will these examples even be acquired if the clinical practice has adapted to allowing the machine to automate the process?

The hardest issue of all here is that the bleed-over of changes in data distributions is not predictable a priori. Returning to the pregnancy example, the body weight of the women, along with a lot of biochemical parameters, changes enormously over the period of a pregnancy. Changing the gestational week in which they are monitored will massively alter the characteristics of all of these parameters, fundamentally altering the basis for any predictive model.

Further, all of this is inherently recurrently connected. A prediction from an algorithm drives care, but the underlying diagnostic variables are drifting. This leads to outcomes which differ from those expected, which in turn leads to an unpredictable response on the part of clinicians. Experienced clinicians who understand the algorithms may, for example, try to restore the predictive power of the algorithm by recording health parameters according to the old standard rather than the current one, which will in turn lead to problems in training the updates for the algorithm.
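To make the detection lag concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a recommended clinical method: the biomarker stream is simulated, the change point is known only to the simulation, and the window size and threshold are arbitrary choices. It compares a sliding window of recent values against a fixed reference period using a hand-written two-sample Kolmogorov-Smirnov statistic.

```python
import random
from bisect import bisect_right


def ks_statistic(reference, recent):
    """Two-sample Kolmogorov-Smirnov statistic: largest gap between the empirical CDFs."""
    ref_sorted = sorted(reference)
    rec_sorted = sorted(recent)
    largest_gap = 0.0
    for value in ref_sorted + rec_sorted:
        cdf_ref = bisect_right(ref_sorted, value) / len(ref_sorted)
        cdf_rec = bisect_right(rec_sorted, value) / len(rec_sorted)
        largest_gap = max(largest_gap, abs(cdf_ref - cdf_rec))
    return largest_gap


def first_detection(stream, reference_size, window, threshold):
    """Return the index of the first observation at which drift is flagged, or None."""
    reference = stream[:reference_size]
    for end in range(reference_size + window, len(stream) + 1):
        recent = stream[end - window:end]
        if ks_statistic(reference, recent) > threshold:
            return end - 1
    return None


rng = random.Random(0)
change_point = 300  # known only because the data are simulated
# Hypothetical biomarker whose mean shifts when the standard of care changes.
stream = [rng.gauss(0.0, 1.0) for _ in range(change_point)]
stream += [rng.gauss(2.0, 1.0) for _ in range(300)]

# Window size and threshold are arbitrary illustrative choices.
detected_at = first_detection(stream, reference_size=200, window=50, threshold=0.5)
lag = detected_at - change_point
print(f"drift flagged at index {detected_at}, {lag} observations after the change")
```

Every observation between the change point and the flag corresponds to a patient assessed by a model built for the old distribution. A shorter window reduces the lag but raises the risk of false alarms.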

Adaptive AI

Adaptive AI is a form of artificial intelligence which is not just a form of automation but which can adapt to the environment in which it is deployed. I typically use digital assistants such as Siri, Google Assistant, or Alexa as examples which people might know. These tend to adapt, at least in theory, to their user’s voice over time. This means that they become easier to use and recognise the user’s voice more accurately.

In the medical context it is less clear what a truly adaptive AI will look like. The great promises are essentially (i) adaptation to statistical characteristics of the local patient population, and (ii) adaptation to the clinician. The former might recognise that incidence of cancer is above average in this neighbourhood and thus not artificially exclude some patients from a cancer-risk stratification based on some normalisation property (e.g. model calibration). The adaptation to the doctor might be able to offer the doctor their preferred treatment for a particular indication rather than always defaulting to the most commonly prescribed treatment nationally. This latter is not that dissimilar to the autocomplete feature in many word processors and also on email platforms.

Adaptive AI is currently under consideration at the FDA. Their discussion document, first posted in January 2020 and recently updated (unfortunately at the same URL) to an Action Document, makes the importance of adaptive AI abundantly clear. It makes up fully one fifth of the plan!

Adaptive AI poses, for me, a potentially crazy level of risk.

I spent years building, simulating and studying large-scale recurrently connected neural networks which were able to learn. Anything which has a closed-loop learning system can just as easily learn bad habits as good.

What is to stop the AI learning to reproduce all of the bad habits of the local physician?

I have already published my solution to this particular problem in my preprint On Regulating AI in Medical Products. You have to place hard limits on what can be learned.

This unfortunately will greatly limit the promises of adaptive AI. But there is no alternative which can be easily described.

If you really want to try out more complex solutions, you can try the following:

You first need to place bounds on what can and cannot be learned. But now they can be soft bounds rather than hard. In a second step, an external module which monitors baseline performance should be required. If the monitor allows it, then the learning module can extend its bounds.

At a later point in development you might even consider updating the monitoring module. At that point, it will be vital that updates to the learning module are not synchronous with updates to the monitoring module. For reward-learning aficionados it is useful to map this to an actor-critic framework, albeit without the necessity for reward learning.

I have actually designed such a system. I did it a number of years ago but have never found a use case where I have needed to implement it. The conception is Bayesian and involves a closed feedback loop. Sam Gershman, at Harvard, worked on similar problems at almost the same time as me. His solutions are published in the scientific literature on cognition, but a layperson will not be able to translate them to this particular domain application.
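The two-step arrangement described above can be sketched in a few lines of Python. This is a toy illustration, not the Bayesian design mentioned in this post: the class names `BoundedLearner` and `Monitor`, the single scalar parameter, and all of the numbers are hypothetical. The learner may only move within its soft bounds, and only the external monitor can widen them, never beyond a hard limit.

```python
from dataclasses import dataclass


@dataclass
class BoundedLearner:
    """A single adaptive parameter that may only move inside [lower, upper]."""
    value: float
    lower: float
    upper: float
    learning_rate: float = 0.1

    def update(self, target: float) -> None:
        # Move towards the locally observed target, then clamp to the soft bounds.
        proposed = self.value + self.learning_rate * (target - self.value)
        self.value = min(self.upper, max(self.lower, proposed))


@dataclass
class Monitor:
    """External check on baseline performance; it is not trained by the learner."""
    min_accuracy: float
    step: float
    hard_lower: float
    hard_upper: float

    def review(self, learner: BoundedLearner, baseline_accuracy: float) -> bool:
        # Widen the soft bounds only if baseline performance is still acceptable,
        # and never past the hard limits.
        if baseline_accuracy < self.min_accuracy:
            return False
        learner.lower = max(self.hard_lower, learner.lower - self.step)
        learner.upper = min(self.hard_upper, learner.upper + self.step)
        return True


learner = BoundedLearner(value=0.5, lower=0.4, upper=0.6)
monitor = Monitor(min_accuracy=0.9, step=0.1, hard_lower=0.2, hard_upper=0.8)

for _ in range(20):
    learner.update(target=0.9)      # local data pull the parameter upwards
capped_value = learner.value        # stuck at the soft upper bound

refused = monitor.review(learner, baseline_accuracy=0.80)  # below baseline: no change
allowed = monitor.review(learner, baseline_accuracy=0.95)  # baseline holds: widen

for _ in range(20):
    learner.update(target=0.9)
widened_value = learner.value       # now stuck at the widened bound
```

In this sketch the monitor has no learning step of its own. If it ever gains one, its updates would need to be scheduled separately from those of the learner, for the reason given above.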

This is technically possible. But, having led a few engineering teams now, I would not like to see it coming to the clinic any time soon.

Real-World Data (RWD)

Real-world data (RWD) is data which is already generated today in some real-world process. In medicine it might be the data which your family doctor enters into their records following your visit.

RWD has, in recent years, been hyped as one of the great combined hopes for both (i) pharma, and for (ii) medical AI.

It is the solution to the problem of, “We don’t have enough data.”

But getting data is rarely the simple solution to the not-enough-data problem that it appears to be. Usually you don’t have the right data.

RWD has two major problems which make it largely unusable for many of the most important tasks. It has:

- lots of holes

- missing outcomes

The holes are recording gaps. You went to one doctor for 5 years, then you studied abroad for a few years, and then you returned. This just looks like a gap in the record. You might have been incredibly healthy in that period. Or you might have given birth and even undergone a serious cancer treatment.

Defenders of RWD as a solution will quickly point out that (i) you can just look at shorter time horizons, and (ii) across hundreds of thousands of patients this barely even matters.

Both of these answers are only correct in narrow circumstances. Studying abroad is only one example; many other time periods are missing from the data set, and the missing data recur in many varying patterns across the hundreds of thousands of patients. The current methods used in mathematical analysis and predictive modelling tend to obscure these facts. But safely deploying a system built upon such data will expose these problems and lead to years of development work to overcome them.
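One way to stop the analysis from obscuring the holes is to make them explicit before any modelling starts. The following Python sketch is only an illustration: the function name `recording_gaps`, the one-year threshold, and the visit dates are all made up. It lists every period in a single patient's record during which nothing was recorded for longer than the threshold.

```python
from datetime import date


def recording_gaps(visit_dates, max_gap_days=365):
    """Return (start, end, days) for consecutive visits more than max_gap_days apart."""
    ordered = sorted(visit_dates)
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        days = (later - earlier).days
        if days > max_gap_days:
            gaps.append((earlier, later, days))
    return gaps


# Hypothetical patient: regular visits, a few years abroad, then a return.
visits = [
    date(2010, 3, 1),
    date(2010, 9, 15),
    date(2011, 4, 2),
    date(2015, 6, 20),
    date(2015, 11, 5),
]

gaps = recording_gaps(visits)
for start, end, days in gaps:
    print(f"no records between {start} and {end} ({days} days)")
```

The record alone cannot say whether the patient was healthy or seriously ill during a flagged period. Flagging the gap only makes the uncertainty visible.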

The missing outcomes issue is even more serious. Here very few people will disagree with me. When a patient never returns to their doctor, we never find out whether they were cured or killed by the prescribed treatment. This is a caricature but it is bizarrely accurate in terms of representing the problem.

RWD will be immensely useful for scientific R&D, for decades to come, but it is unlikely to lead to many real-world predictive models.

False positive rates

The toolset for building predictive models is extremely advanced. Within reason, we can build a predictor for almost any outcome on any data set.

Sounds good, so what’s the problem?

The problem is that not all associations are real.

Correlations between data points may be real or they may be a result of a specific instance of random sampling from the data. To make things even more complicated, true causal links – which are necessary for a real-world medical AI application – can have zero correlative structure despite being formally linked. There is no solution to this conundrum apart from having deep domain knowledge of actual cause-and-effect.
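The point that a true causal link can show zero correlation is easy to demonstrate with a toy example. In the Python sketch below, which uses invented numbers, the outcome is fully determined by the input (harm grows with the square of the deviation from an ideal dose, in either direction), yet the Pearson correlation between the two is zero.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient, computed from its definition."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical example: deviation from the ideal dose, in arbitrary units.
dose_deviation = [-2.0, -1.0, 0.0, 1.0, 2.0]
# Harm is caused entirely by the deviation, but symmetrically in both directions.
harm = [d ** 2 for d in dose_deviation]

r = pearson(dose_deviation, harm)
print(f"correlation between deviation and harm: {r}")
```

A purely correlational screen would discard this variable, although it is the only cause of the outcome. Knowing that both under-dosing and over-dosing are harmful is exactly the kind of domain knowledge needed to find it.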

So what does this mean?

If you give me a decent-sized data set, with a bit of noise in it, then I can build a predictive model for almost anything based on that data set. And, given enough attempts at trial-and-error, I can even get matching performance on the hold-out set.

This is usually the point where researchers are tempted to use an analogy to multiple hypothesis testing in statistics. I want to resist that narrative, however. For one thing, I have been kicking around an idea for a scientific paper for a few years, which basically explains that this is a false analogy for ML thanks to correct ML best-practices.
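A small simulation shows how trial-and-error alone can produce a convincing hold-out score. This Python sketch is deliberately extreme and entirely synthetic: the labels are coin flips, every "model" predicts pure noise, and the number of attempts is an arbitrary choice. The best of many attempts is kept based on its hold-out accuracy and is then scored on fresh data which it has never been selected against.

```python
import random

rng = random.Random(42)
n_holdout, n_fresh, n_attempts = 100, 10_000, 2_000


def accuracy(predictions, labels):
    """Fraction of predictions which match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Labels are coin flips: there is nothing real to learn.
holdout_labels = [rng.randint(0, 1) for _ in range(n_holdout)]
fresh_labels = [rng.randint(0, 1) for _ in range(n_fresh)]

best_holdout, best_fresh = 0.0, 0.0
for _ in range(n_attempts):
    # Each attempt stands in for one modelling iteration; its output is noise.
    holdout_predictions = [rng.randint(0, 1) for _ in range(n_holdout)]
    score = accuracy(holdout_predictions, holdout_labels)
    if score > best_holdout:
        # Keep the attempt which looks best on the hold-out set, and record
        # how the same noise "model" fares on data it was not selected on.
        fresh_predictions = [rng.randint(0, 1) for _ in range(n_fresh)]
        best_holdout = score
        best_fresh = accuracy(fresh_predictions, fresh_labels)

print(f"best hold-out accuracy: {best_holdout:.2f}")
print(f"accuracy on fresh data: {best_fresh:.2f}")
```

The selected attempt looks clearly better than chance on the hold-out set and falls back to roughly chance on fresh data. Nothing in the hold-out score itself reveals this; only the discipline of the person running the attempts does.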

So let’s ignore the temptation of that analogy. Just consider my two points instead.

The power of ML tools is so great that if an association can be drawn, true or otherwise, then we can likely find / build it.

This pushes a huge responsibility onto the shoulders of the ML engineer. They now become the guarantor that they followed best-practices sufficiently well that no relevant slippages occurred.

This single-point-of-failure approach is not acceptable for medical applications. In contrast, the drug development process requires multiple levels of congruent evidence before a drug candidate can first be trialed in a human. And that, in turn, is only the first of many steps before the drug comes to market.

Diminishing rate of returns

I have gotten in trouble with academic peer-reviewers in the past for making the following statement:

ML is about finding the strongest signals and then building a product around them.

Similarly to the decline of the blockbuster drug in pharma, we have a diminishing returns issue in medical AI. We are able to detect and exploit the most prevalent signals. These are indications which are widespread in the community and have accessible biomarkers.

Interestingly, in this case we can describe the problem much more easily than the pharma issue. That is because the pharma problem is mired in issues of the biological unknown. There is always the potential that a new discovery will solve the biological problem. Here, however, we are planning digital solutions and the technical approach is well understood.

ML is a machine which is best used at scale. So the ML expert is always looking at the dimension, beyond the current project, of what we can do next. And that is where things do not look so good for medical AI.

The vast majority of human diseases lie outside of the realm of common indications with accessible biomarkers. What about (relatively) rare diseases? In the case of underrepresented diseases, amassing sufficient data to extract a similarly sized signal costs exponentially more in terms of both money and acquisition effort. And inaccessible biomarkers? Then you have to substitute biomarkers which are poor correlates instead. This diminishes the signal, thus again requiring more data. Often these issues are not solvable.
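A back-of-the-envelope calculation gives a feel for how quickly the data requirements grow. The Python sketch below rests on two simplifying assumptions which are not claims about any real disease: that the number of patients to be screened scales inversely with prevalence, and that a substitute biomarker attenuates the detectable correlation multiplicatively. The sample size uses the standard Fisher z-transform approximation for detecting a correlation, and all of the numbers are invented.

```python
import math
from statistics import NormalDist


def patients_to_screen(cases_needed, one_in):
    """Patients to screen to find cases_needed cases of a disease affecting 1 in one_in."""
    return cases_needed * one_in


def samples_for_correlation(r, alpha=0.05, power=0.8):
    """Approximate sample size to detect a correlation r (two-sided test, Fisher z)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    return math.ceil(((z_alpha + z_power) / math.atanh(r)) ** 2 + 3)


# Prevalence: the same 1,000 cases, for a common and for a rare indication.
common = patients_to_screen(1_000, one_in=20)
rare = patients_to_screen(1_000, one_in=2_000)

# Biomarker access: assume the true biomarker correlates with the outcome at 0.5,
# and the accessible substitute correlates with the true biomarker at 0.5.
direct = samples_for_correlation(0.5)
substitute = samples_for_correlation(0.5 * 0.5)

print(f"patients to screen: common {common}, rare {rare}")
print(f"samples needed: direct biomarker {direct}, substitute {substitute}")
```

The two effects multiply. A rare disease which is only reachable through a weak substitute biomarker needs more cases, and each of those cases requires far more patients to find.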

Today the best possible approach is to carefully examine data sets for signal, to build products accordingly, and to carefully plan roadmaps to take account of the accessibility of biomarkers, their correlation with indications, and the multiplicity of alternative approaches available in a neighbouring indication or biomarker subset.

Solution: paradigm shift?

That is how we have solved these problems throughout history.

- Reimagine the technical approach.

- Reimagine how we apply the technology (think about the auto-suggest example).

However, I am not such a tech-bro that I believe we can always invent our ways out of trouble. For example, I would rather not bet the future of humanity on our ability to invent a solution to climate change. And I can see in the case of medical AI enormous potential for us to continue to plough untold resources into the wrong directions.

I do see promise in both of these approaches. And, I will continue to blog about them. I continue to work in this space.

The first step, though, is to recognise that you have a problem.